Breaking the fourth wall of an interview

Shreyas Prakash

A group of men eating ice cream during peak London summer started drowning in large numbers.

Because so many of the men who drowned had been eating ice cream, it was concluded that eating ice cream led to drowning.

This sounded absurd to the researchers investigating this curious link between ice cream and drowning. However, on closer investigation, it became evident that this was a classic case of a confounding variable at play. The actual factor driving both the increased ice cream consumption and the rise in drownings was the hot summer weather.

People were more likely to eat ice cream and to swim during the summer, hence the correlation. This illustrates how confounding variables can mislead us in everyday life. A more relevant example is the hiring process, where a candidate excels in interviews but performs poorly on the job. Here, the ‘experience of taking interviews’ acts as the confounding variable.

Candidates who frequently attend interviews become adept at navigating them, which doesn’t necessarily reflect their actual job performance.

These ‘interview hackers’ develop strong interviewing skills that overshadow their actual professional abilities.

Assessing candidates has become particularly hard now that we live in a post-GPT world, where LLMs are increasingly good at drafting product strategies, writing PRDs, and critically thinking through the kinds of scenarios that product managers usually own end-to-end.

Help me with an AI experiment: Which of these two is the better answer for the task of developing a product strategy? Vote 🅰️ (left) or 🅱️ (right) in the comments.

Bonus: Let me know if you think the one you chose was AI 🤖 or human 🙍. pic.twitter.com/YfcFMbY3QO

— Lenny Rachitsky (@lennysan) June 5, 2024

In this experiment by Lenny Rachitsky, most evaluators rated the AI-generated strategy as the better answer (despite knowing that it was indeed generated by AI).

I recently read about applications where AI provided real-time answers to the questions interviewers asked, essentially rigging the system.

I faced a similar situation while drafting an assignment to assess a product manager’s skills. It’s highly likely that the candidate is using some version of ChatGPT or Claude to draft better answers. How, then, do we cut through the noise and understand their thinking process?

To answer this question for myself, I’ve been documenting some internal meta-notes to help me better distinguish genuine candidates from interview hackers. These meta-notes are oriented towards product managers, though some of them may also apply to other domains.

Breaking the fourth wall

I sometimes subtly probe candidates to go deeper. Occasionally, they break the ‘fourth wall’ and offer a spiky point of view.

Say, for example, you ask the candidate: ‘Can you tell me, with an example, how you prioritise your time?’

A candidate will usually start with a project they’ve taken up, the frameworks they used, and how they approached prioritisation with them. This is where most conversations go, and often that is where they end.

Some candidates, however, reflect more critically on their own process and even talk about scenarios where a specific prioritisation framework did not work. In reality, there are no blanket solutions.

Sometimes, through this exercise, a spiky point of view emerges: one that is rooted in their experience, yet one that others could still disagree with. It captures attention because it stands out in a sea of sameness, and it provides a valuable signal that the candidate has learned lessons rooted in practice.

Trees and Branches

I’ve been able to identify top-notch talent by asking: ‘What was the hardest problem you’ve encountered, and how did you approach it?’ As the candidate narrates, I use the metaphor of a tree to weave questions around their story.

When a candidate goes deep into one particular topic (say, metrics), I zoom out a bit and ask them about outcomes. I don’t need to know what each leaf looks like; I want to see the overall outline of the tree, its branches and its twigs.

Whenever the candidate goes too deep, I nudge them to go broader. When they go too broad, I nudge them to go one level deeper. While doing this, I also check whether the candidate takes a holistic approach to problem solving. For example, if they’re building an electronic health record system, how are they thinking about the legal, data-privacy, and ethical implications of collecting patients’ phone numbers?

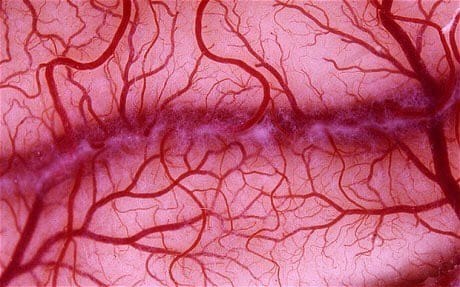

Trees are not alone: blood vessels and tree roots follow similar branching patterns. Branching is an efficient pattern in many contexts, even in something like interviewing.

Listening to respond

To assess listening skills, I give candidates constructive criticism at the end of the interview and see how they respond. If they listen with the intention of learning more, I see that as a good sign. If they listen in order to respond, or worse, to defend, that’s a red flag.

Narratives on lived experiences

I also frame questions slightly differently. Instead of asking ‘How should a product manager involve stakeholders?’, I reframe it as ‘Describe a challenging past situation involving difficult stakeholders. How did you navigate it?’

I’ve found that the answers shift from theory to lived experience. This is very difficult to fake, and the more interviews one conducts, the better one gets at spotting fabricated narratives. They rarely pass the smell test.

Stretching to extremes

Another interview technique I recently adopted involves stretching an idea to its extremes. When a candidate describes a decision they made, I push the scenario to extreme conditions. For instance:

- What if the data is insufficient?

- What if there are no insights from interviews on this process?

- What if there is no clear roadmap?

By posing these hypothetical extremes, we can gain insight into the candidate’s internal decision-making model. This method mirrors a Socratic dialogue, primarily driven by ‘What if…’ questions.

The effectiveness of this technique lies in its ability to move beyond conventional responses. Many candidates are familiar with standard practices and may offer predictable answers when asked about them. Critical thinking, however, often emerges in response to extreme situations, outliers, and edge cases.

This approach helps identify candidates who can think critically and adaptively in unconventional scenarios, and separates the ‘interview hackers’ from the rest.
