How does AI affect UI?

Shreyas Prakash

Intended audience: conversational UI designers in the healthcare industry who are curious about the UI affordances and design patterns in vogue right now.

Our online conversations have become increasingly lifelike, yet lifeless at the same time. The UIs of apps have become more conversational and chat-like. It's not just apps; even websites have their own chat-like interfaces on the side. And all of them face the peril of being infested by bot-like avatars.

As Maggie Appleton points out, we have more keyword-stuffing “content creators” and more algorithmically manipulated junk.

It's like a dark forest that seems eerily devoid of human life – all the living creatures are hidden beneath the ground or up in trees. If they reveal themselves, they risk being attacked by automated predators. Humans who want to engage in informal, unoptimised, personal interactions have to hide in closed spaces like invite-only Slack channels, Discord groups, email newsletters, small-scale blogs, and digital gardens. Or make themselves illegible and algorithmically incoherent in public venues.

Bots are not necessarily bad or evil, per se. It’s just that the conversations enacted by them often feel soulless. That said, there are instances where chatbot conversations have become more and more lifelike.

Character.AI, an AI chatbot service, has had several reports of users becoming addicted to its virtual bots, with some even choosing to engage with them over their partners.

In this environment, it’s interesting to see the design patterns adopted by the industry as we continue to use AI for conversations. Here are some design patterns that have caught my attention:

Offering preset texts

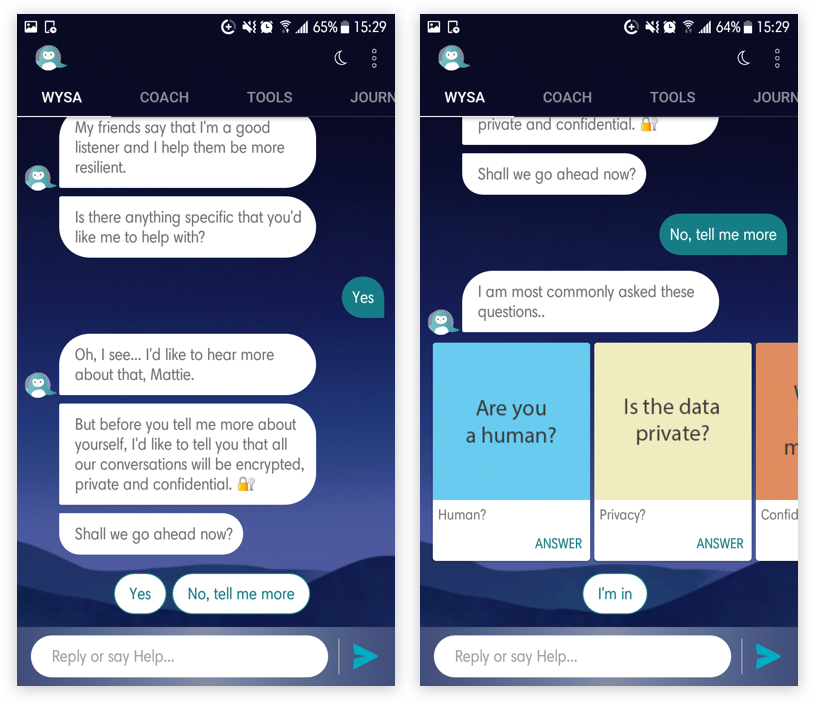

Screenshots from Wysa

The app offers pre-populated short-answer suggestions, as well as suggested options for larger, more informational outputs that need minimal user input. Offering such preset text helps direct the conversation and limits the chances of breaking its flow.

Overcoming gender-bias in voice agents

There is a disproportionate use of feminine names and voices when it comes to voice assistants.

Over the last decade or so, the creators of popular AI voice assistants like Siri, Alexa and Google Assistant have come under fire for their disproportionate use of feminine names and voices, and the harmful gender stereotypes this decision perpetuates. Smart devices with feminine voices have been shown to reinforce commonly held gender biases that women are subservient and tolerant of poor treatment, and can be fertile ground for unchecked verbal abuse and sexual harassment.

I recently came across the Genderless Voice initiative, which aims to reduce this gender disparity.

Q is a genderless voice assistant that speaks in a gender-neutral voice. It would not only reflect the diversity of our world, but also reduce gender bias.

Q, Genderless Voice Assistant

Pre-answer, Post-answer

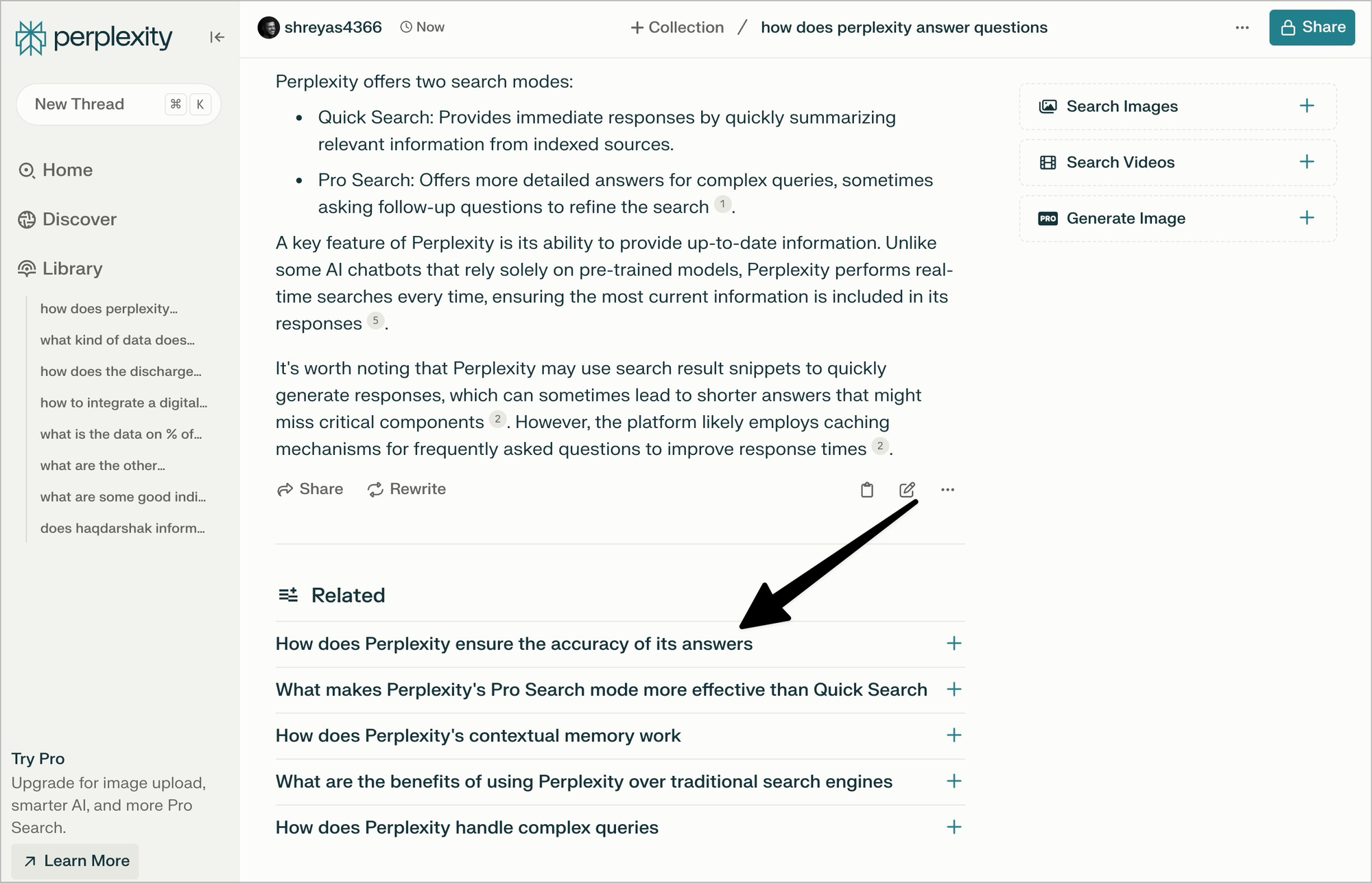

While chatbots continue to answer questions, it’s important to think about the entire journey of a question. What happens before a question is answered? What happens after a question is answered?

In the above example, Perplexity AI continues to provide cues for digging deeper into the rabbit hole by suggesting follow-up questions the user can ask once the original question has been answered. This helps create a flywheel effect for a user curious about exploring a topic.

Handoff to a human

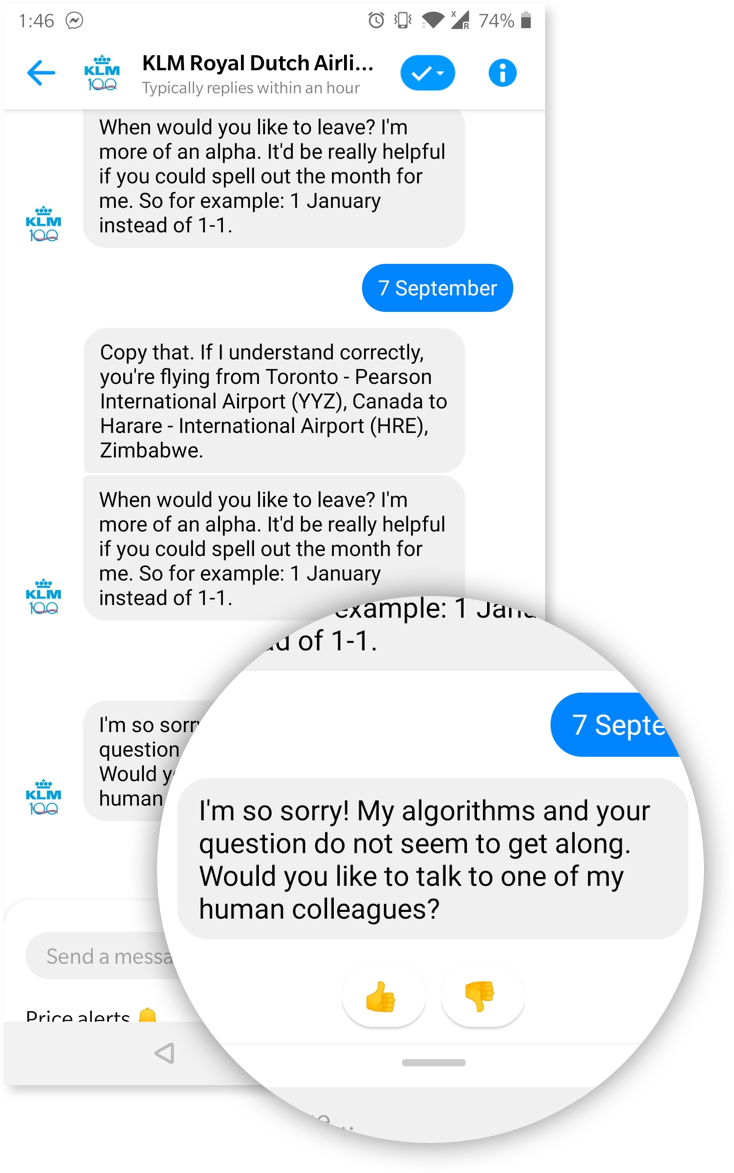

When an AI-led conversation doesn’t go in the expected direction, and the amount of steering it needs crosses a certain threshold, the AI hands the conversation over to a human expert to take it forward.

Screenshot from KLM app

In this case, the airline chatbot (KLM) allows the user to switch to direct human interaction when it is unable to complete the task as requested.

Obviously prioritising a hand-off to human agents reduces the efficiencies that automation brings to business processes. But there will always be categories of complex issues that fall outside of an AI’s capabilities, which human agents are better able to solve. Beyond complexity, where a relationship requires empathy, passion, emotion, or another form of authentic human connection, simulating this via AI is still a greater challenge than simply employing human agents to make that connection with the user.
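To make the threshold idea concrete, here is a minimal sketch of a handoff rule. It assumes the bot produces a confidence score for each turn; the names `ConversationState` and `route_turn` and both threshold values are hypothetical and chosen only for illustration. None of this reflects how KLM or any other product actually decides.

```python
from dataclasses import dataclass

# Hypothetical thresholds, picked only for this illustration.
MAX_FAILED_TURNS = 2   # consecutive weak turns tolerated before handing off
MIN_CONFIDENCE = 0.5   # below this, a turn counts as "not handled"


@dataclass
class ConversationState:
    failed_turns: int = 0  # consecutive turns the bot could not handle


def route_turn(state: ConversationState, confidence: float) -> str:
    """Return "bot" to keep automating, or "human" to hand the chat over."""
    if confidence < MIN_CONFIDENCE:
        state.failed_turns += 1
    else:
        state.failed_turns = 0  # a confident turn resets the counter
    return "human" if state.failed_turns >= MAX_FAILED_TURNS else "bot"


state = ConversationState()
for confidence in (0.9, 0.3, 0.2):
    print(route_turn(state, confidence))
```

Counting consecutive weak turns, rather than reacting to a single one, is one way to avoid handing off too eagerly while still catching a conversation that keeps going sideways.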

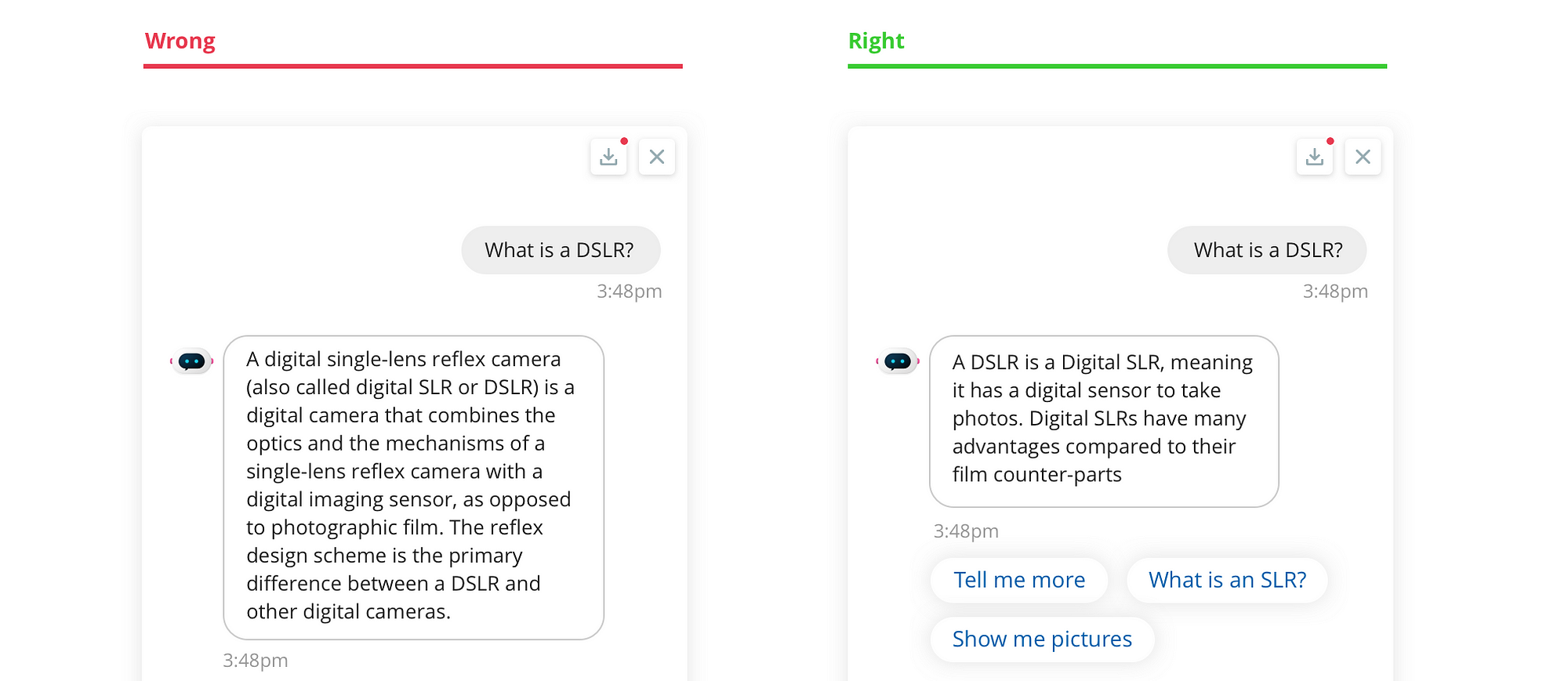

Reducing the thickness of the ‘technical wall’

In healthcare chatbots, most of the answers are quite dense. They might sound as if they’ve been extracted from the first paragraph of a relevant Wikipedia article.

Conversational AI agents are trying their best to speak as a friend, and not use dense prose.

The first generation of conversational AI agents were trained in la langue, and not la parole. We see more instances of these agents using the colloquial common tongue, and making themselves sound more human.

A friend helps you by telling you what they understand of a topic, in their own words. This is the kind of language AI agents are adopting to help humans understand and comprehend better.

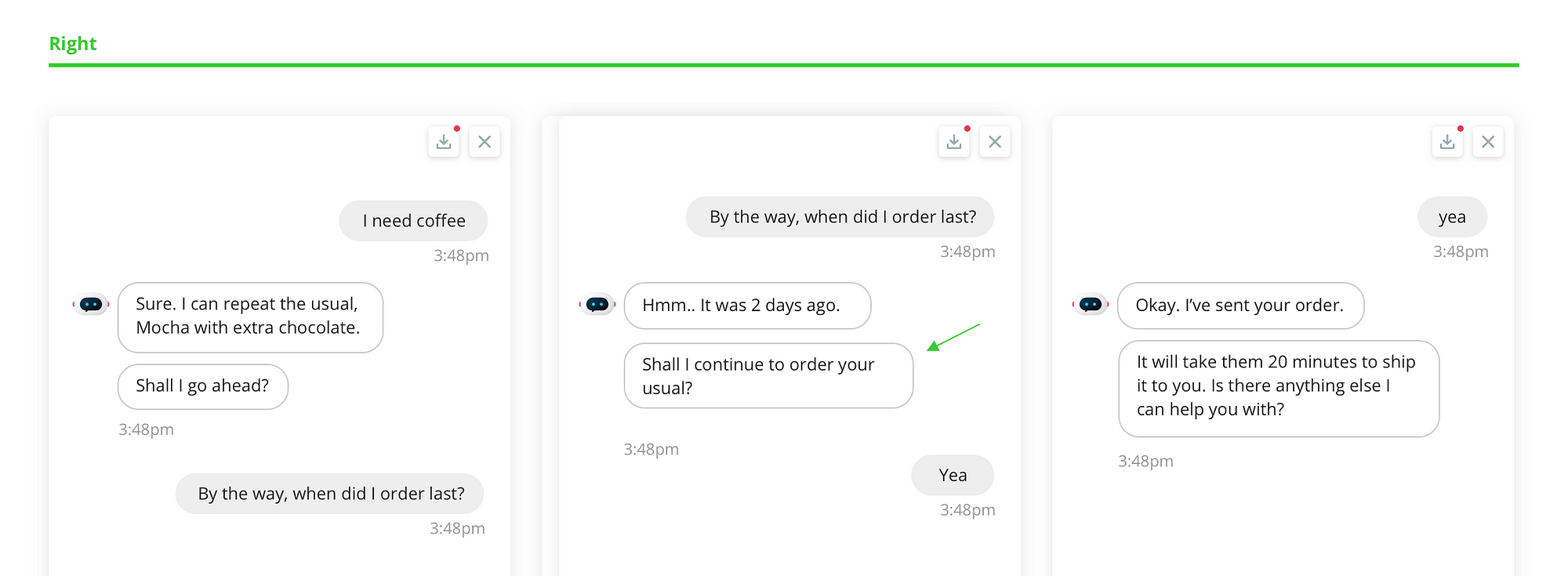

Sidetracks and maintracks

Chatbots usually have an objective. Whenever users drift off-track, it’s important to bring them back to the core ‘job-to-be-done’. Why did they come here? What purpose does this chatbot serve? In the above example, you can see how the agent brings the user back to the key function when they raise multiple topics across different threads.

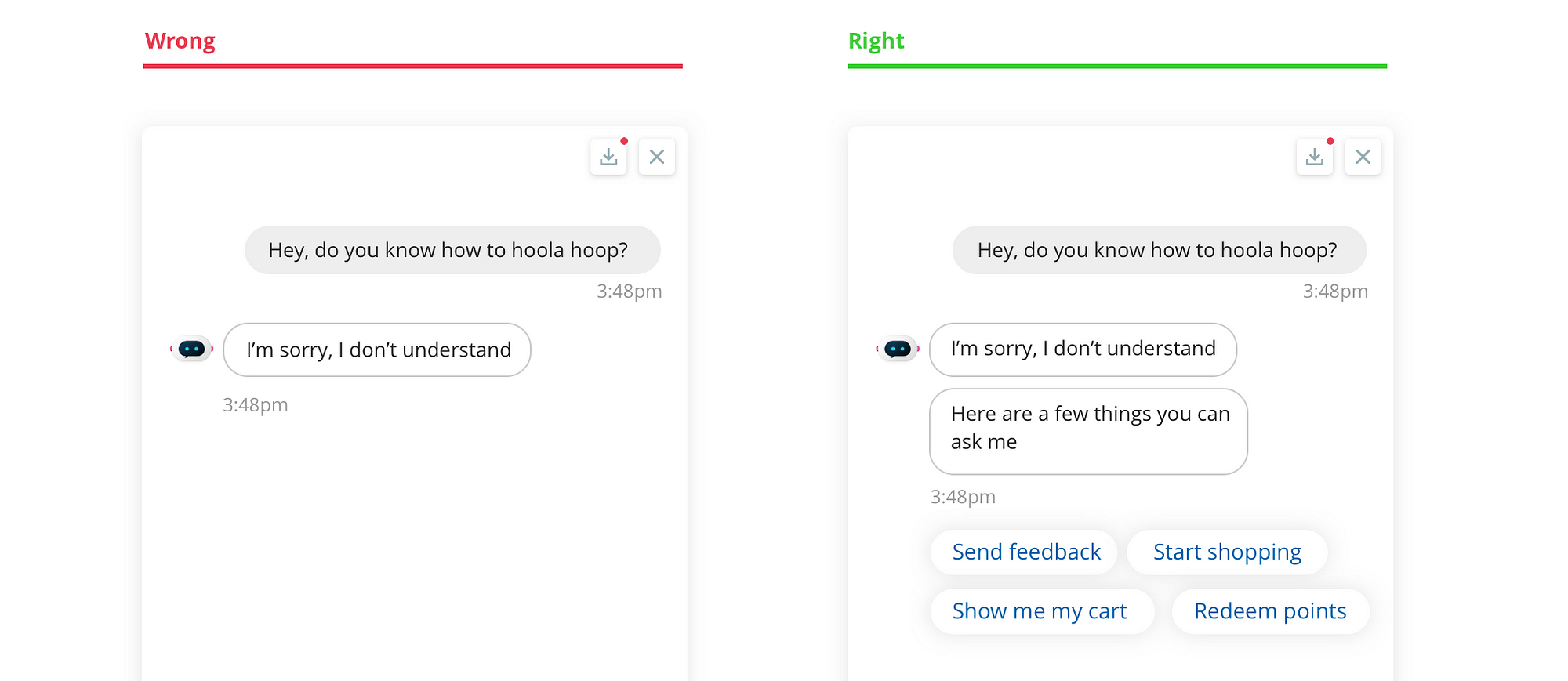

Avoiding dead ends

It’s also important to design with continuity in mind. When we ask a chatbot a question it has no answer to and it replies with an ‘I don’t know…’, the conversation just ends there awkwardly, and it takes more effort to restart it.

Most people will just give up when chatbots or assistants say something like “I’m sorry, I’m still learning” or “I’m not sure I can help with that”.

It is in these situations that the user and the business need the conversation to keep going. Here’s how you do it.

As you can see here, the conversation extends despite the chatbot not having an answer to the user’s immediate question. More starter text is provided to overcome the friction.

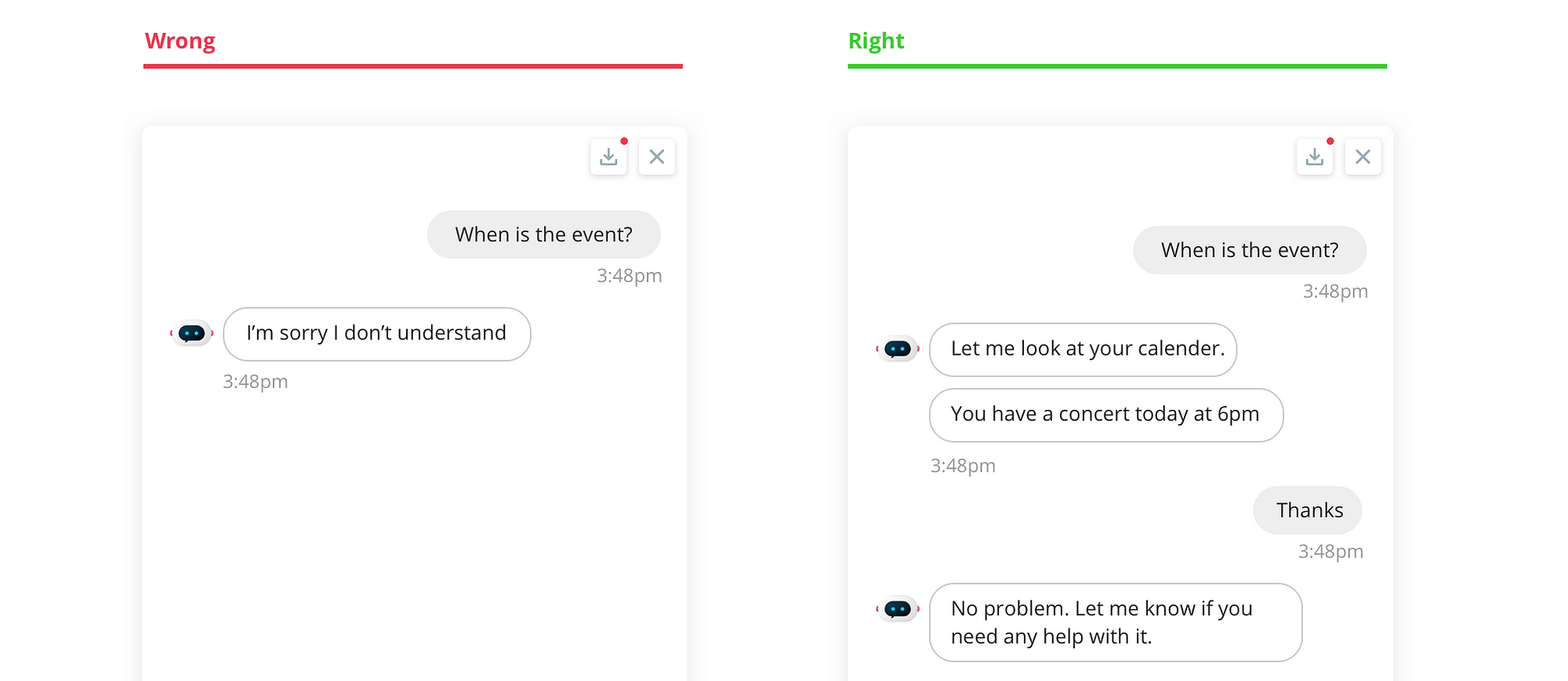
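One way to picture this pattern in code: every fallback reply carries a few conversation starters instead of stopping at an apology. This is a rough sketch only. The `ANSWERS` lookup, the `STARTERS` list, and the `respond` function are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical knowledge base and starter prompts, invented for illustration.
ANSWERS = {
    "opening hours": "The clinic is open 9am to 5pm, Monday to Friday.",
}
STARTERS = ["Book an appointment", "Find a clinic near me", "Talk to a person"]


@dataclass
class Reply:
    text: str
    suggestions: List[str] = field(default_factory=list)  # tappable next steps


def respond(question: str) -> Reply:
    """Answer if possible; otherwise admit the gap and offer ways forward."""
    key = question.lower().strip(" ?")
    if key in ANSWERS:
        return Reply(ANSWERS[key])
    # No answer found: never end on a bare "I don't know".
    return Reply(
        "I don't have an answer to that yet, but here is what I can help with:",
        list(STARTERS),
    )


reply = respond("Can you recommend a diet plan?")
print(reply.text)
print(reply.suggestions)
```

The design choice here is that the fallback is a first-class reply with its own suggestions, so the UI always has something to render as the next step.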

Conversational implicature

When users ask a question, what is said, and what is implied might be two different things. It’s important for the chatbot to get the complete context before answering such questions.

If a medical topic is being discussed and a follow-up question comes immediately after, the AI should pull in both the previous answer and the new question to understand the implied question hidden between the lines.

It is common knowledge that we humans don’t always mean what we say, or say what we mean. We use metaphors or form sentences in the context of other events. For another human being, it’s natural to process this sort of conversation. Of course we “know” what the other person wants. We don’t need people to use a specific set of words.

This is called conversational implicature.

We see this a lot in customer service. Customers who call about a broken internet connection describe the same problem in different ways. “I’m not able to get online” and “I think my internet is broken” are both indicative of “a problem”, and the problem is “lack of internet”. This is why you need to write alternate queries for the same piece of information.

Thinking about what they mean, why they said it and what they actually said can help you give a holistic user experience.
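As a small illustration of writing alternate queries for the same piece of information, the sketch below maps the two example sentences above to a single intent. The intent name `no_internet` and the helper functions are hypothetical, and the exact-match lookup is a deliberate simplification: a production bot would more likely rely on a trained intent classifier than on a hand-written list.

```python
from typing import Optional

# Hypothetical intent with its alternate phrasings, taken from the examples above.
ALTERNATE_QUERIES = {
    "no_internet": [
        "i'm not able to get online",
        "i think my internet is broken",
    ],
}


def normalise(text: str) -> str:
    """Lowercase, unify apostrophes, and drop surrounding punctuation."""
    return text.lower().replace("’", "'").strip(" .!?")


def detect_intent(utterance: str) -> Optional[str]:
    """Return the intent whose phrasings include the utterance, if any."""
    cleaned = normalise(utterance)
    for intent, phrasings in ALTERNATE_QUERIES.items():
        if cleaned in phrasings:
            return intent
    return None


print(detect_intent("I’m not able to get online."))
print(detect_intent("I think my internet is broken!"))
```

Both sentences resolve to the same intent, which is the point: what was said differs, what was meant does not.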

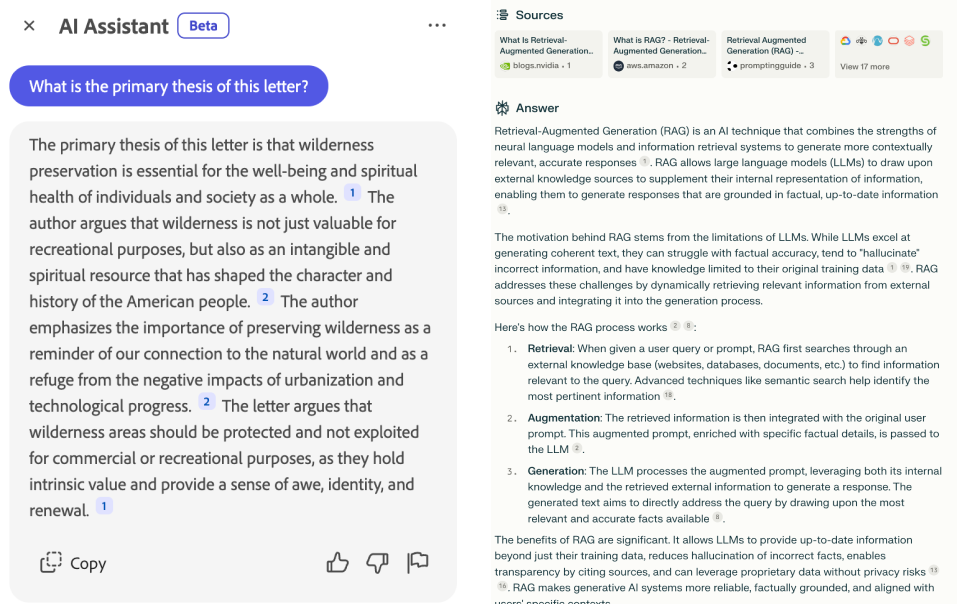

Citations for trust

Citations help users verify that the referenced material is relevant and valid. This way users don’t cede control over the accuracy of their content to the AI’s search engine.

The adoption of RAG (Retrieval-Augmented Generation) has dramatically improved AI responses, largely by making them much better at the needle-in-a-haystack problem.

Now, instead of simply summarizing a topic or a primary source, AI can collect information from multiple sources and aggregate it into a single response. Citations help users trace the information contained in a response back to its original material.
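Here is a toy sketch of how a response can aggregate several sources and still let the user trace each statement back. Everything in it is an assumption made for illustration: the `Source` type, the sample documents, and the word-overlap retrieval, which is a crude stand-in for the embedding search a real RAG pipeline would typically use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Source:
    title: str
    text: str


# Hypothetical documents, invented for this example.
SOURCES = [
    Source("Sleep basics", "Adults generally need seven or more hours of sleep."),
    Source("Caffeine guide", "Caffeine late in the day can delay sleep."),
    Source("Hydration tips", "Drink water regularly."),
]


def retrieve(question: str, sources: List[Source]) -> List[Source]:
    """Toy retrieval: keep sources sharing at least one word with the question."""
    words = set(question.lower().strip("?").split())
    return [s for s in sources if words & set(s.text.lower().strip(".").split())]


def answer_with_citations(question: str) -> str:
    """Join the retrieved passages and number each one against a reference list."""
    hits = retrieve(question, SOURCES)
    if not hits:
        return "No sources found."
    body = " ".join(f"{s.text} [{i}]" for i, s in enumerate(hits, 1))
    refs = "\n".join(f"[{i}] {s.title}" for i, s in enumerate(hits, 1))
    return f"{body}\n{refs}"


print(answer_with_citations("How does caffeine affect sleep?"))
```

The numbered markers in the body and the matching reference list are what the UI can turn into tappable citations.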

And there is more to the list!

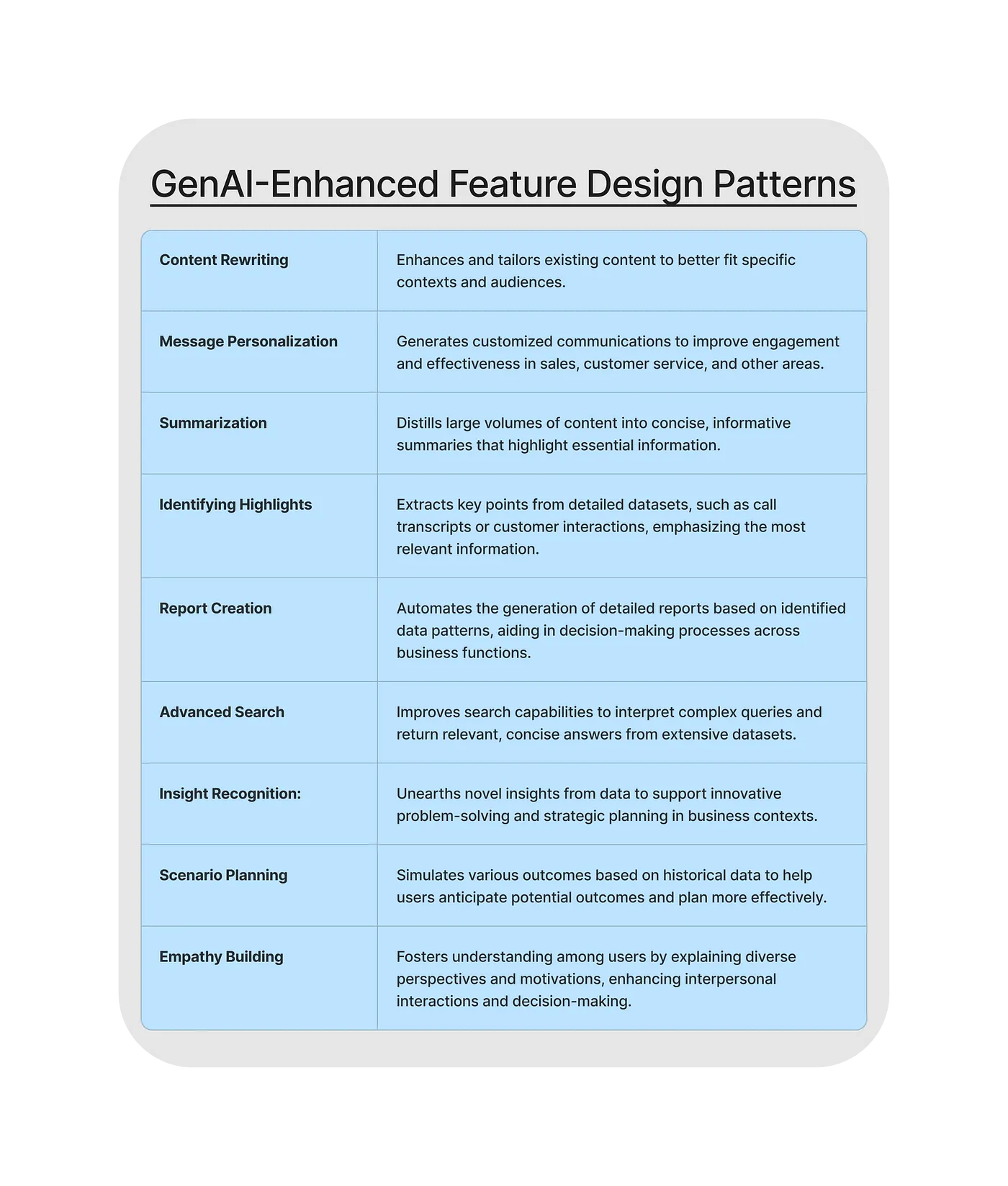

Table of the nine GenAI-Enhanced Design Patterns

These are the nine broad design patterns that have proliferated in the AI landscape over the past year.
