How to spot human writing on the internet?

Shreyas Prakash

In the classic Turing Test, a computer is considered intelligent if it can convince a human, in conversation, that it is also human. A decade ago, when that was still the interesting question, human-generated content dominated the internet.

Today, the landscape has shifted dramatically. AI-generated content now rivals, and in some cases outpaces, human-created material.

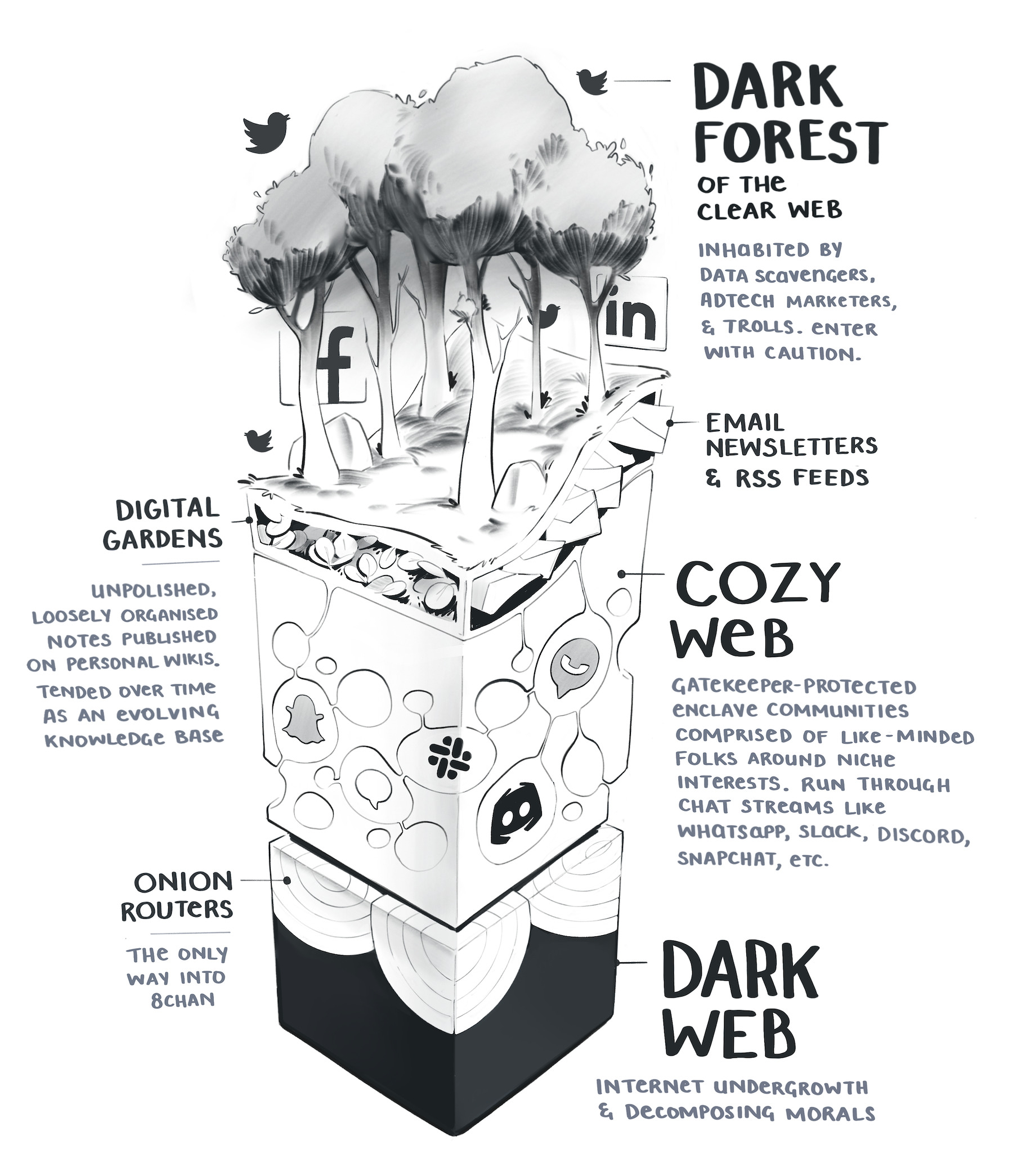

The ‘expanding dark forest’ theory builds on an idea 4chan proposed years ago: that most of the internet is “empty and devoid of people” and has been taken over by artificial intelligence. A milder version of this theory is simply that we’re overrun with bots. Most of us take that for granted at this point.

As this dark forest expands, we will become deeply sceptical of one another’s realness.

The dark forest theory of the web points to the increasingly life-like but life-less state of being online. Most open and publicly available spaces on the web are overrun with bots, advertisers, trolls, data scrapers, clickbait, keyword-stuffing “content creators,” and algorithmically manipulated junk.

Source: Maggie Appleton

As the web keeps filling up with this content, it’s a good moment to consider the exact reverse of the Turing test experiment: in this flood of bot-generated word salad, how human are you?

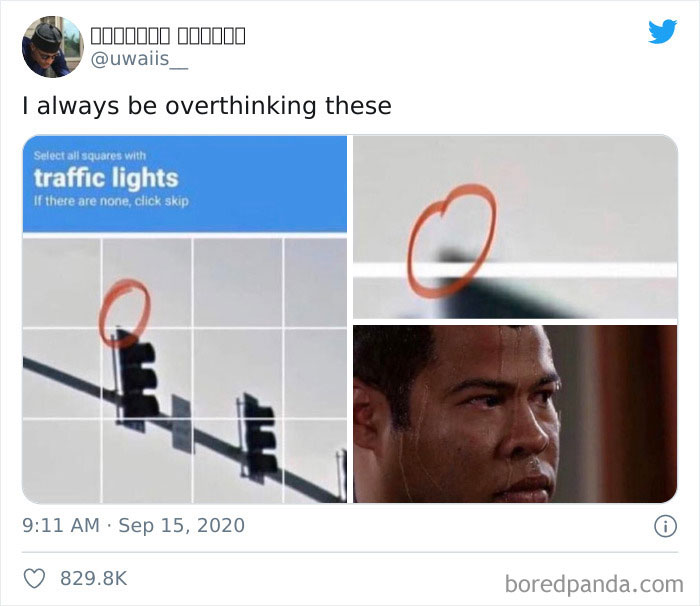

In a reverse Turing test, the roles are flipped. In one version, a human attempts to pass as a machine, trying to convince an AI or a panel of robots that they are one of them, often by mimicking machine-like responses or behaviours. In another, the machine becomes the judge: CAPTCHA is the most familiar example, used by websites to distinguish human users from bots.

CAPTCHA, a standard example of a reverse Turing test.

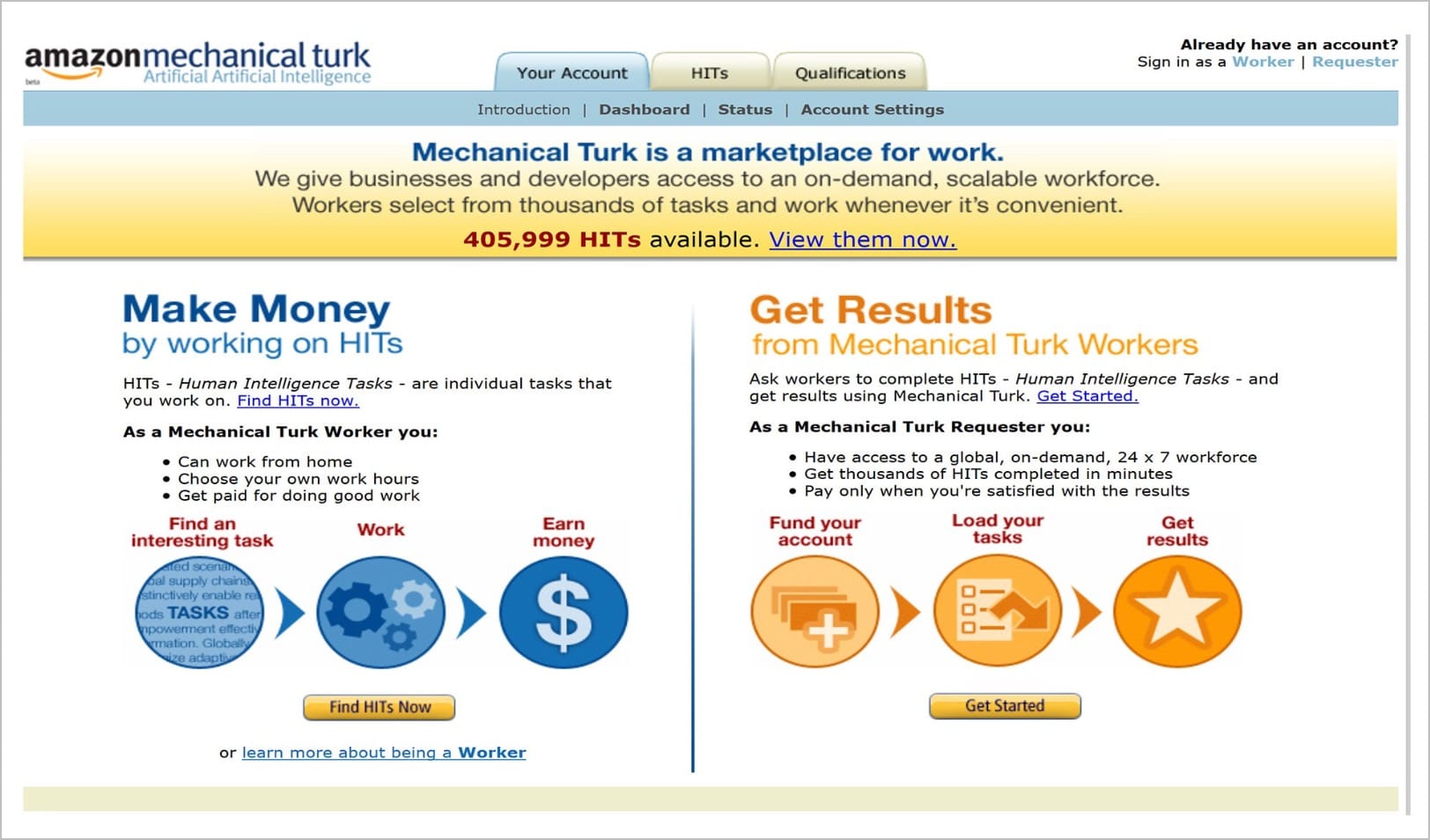

Amazon Mechanical Turk is another example. It’s a crowdsourcing internet marketplace that lets individuals and businesses (Requesters) coordinate human workers (Workers, or Turkers) to perform tasks that computers are currently unable to do.

There are also more recent examples in which one of the NPCs in a game is played by a human, and the AI characters have to work out which of them is the human. This video is both eerie and fascinating:

A group of the world’s most advanced AIs tries to figure out which of them is the human.

How might we, as humans, distinguish ourselves from an LLM?

At this point in time, bot-generated writing is relatively easy to detect: wonky analogies, weird sentence structures, repetition of common phrases, and some pseudo-profound bullshit. A phrase like “Wholeness quiets infinite phenomena” is total bullshit. It means nothing, and yet people still rate it as profound. If you’re still not convinced, try searching Google for common terms. You will find SEO optimizer bros pumping out billions of perfectly coherent but predictably dull informational articles on every long-tail keyword combination under the sun.

However, as time passes, it will likely get harder for people to tell human writing apart from AI-generated content.

This is what we’re competing against. The more of these articles we read, the more easily we can ‘smell’ an AI-generated one from a distance. Crawl the web long enough and you will find certain words used again and again. ‘Elevate’ and ‘delve’ are perhaps the worst culprits, with the former often appearing in titles, headings and subheadings.

Language models also have this peculiar habit of making everything seem like a B+ college essay.

‘When it comes to…’.

‘Remember that…’.

‘In conclusion…’.

‘Let’s dive into the world of…’.

Remarkable. Breakthrough. State-of-the-art. The rapid pace of development. Unprecedented. Rich tapestry. Revolutionary. Cutting-edge. Push the boundaries. Transformative power. Significantly enhances.

Apart from some favourite phrases, language models also have some favourite words.

Explore. Captivate. Tapestry. Leverage. Embrace. Resonate. Dynamic. Delve. Elevate. And so on.
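If you wanted to turn this ‘smell test’ into something mechanical, the crudest version would simply count these words and phrases. Here is a minimal Python sketch, assuming a hand-picked `MARKERS` list taken from the words and phrases above; `marker_counts` and `markers_per_100_words` are hypothetical names, and a high score is a hint rather than proof, since plenty of humans delve and elevate too.

```python
import re
from collections import Counter

# Hypothetical marker list: words and phrases quoted in this post as LLM favourites.
MARKERS = [
    "delve", "elevate", "explore", "captivate", "tapestry", "leverage",
    "embrace", "resonate", "dynamic", "unprecedented", "cutting-edge",
    "state-of-the-art", "push the boundaries", "when it comes to",
    "remember that", "in conclusion", "let's dive in",
]


def marker_counts(text: str) -> Counter:
    """Count case-insensitive, whole-word occurrences of each marker in `text`."""
    # Lowercase and turn curly apostrophes into straight ones so "Let’s" matches.
    normalised = text.lower().replace("\u2019", "'")
    counts = Counter()
    for marker in MARKERS:
        # \b on both sides stops "dynamic" matching inside "dynamically"
        # (it also means inflections such as "delves" are not counted).
        hits = re.findall(rf"\b{re.escape(marker)}\b", normalised)
        if hits:
            counts[marker] = len(hits)
    return counts


def markers_per_100_words(text: str) -> float:
    """Marker hits per 100 whitespace-separated words: a crude score, not a verdict."""
    words = text.split()
    if not words:
        return 0.0
    return 100 * sum(marker_counts(text).values()) / len(words)


sample = "Let’s dive in! In this post we delve into the rich tapestry of cutting-edge AI."
print(marker_counts(sample))
print(round(markers_per_100_words(sample), 1))
```

On that sample, four markers turn up in fifteen words, roughly 27 per 100 words. A plain word list like this is easy to game, which is exactly why it only works while the habits persist.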

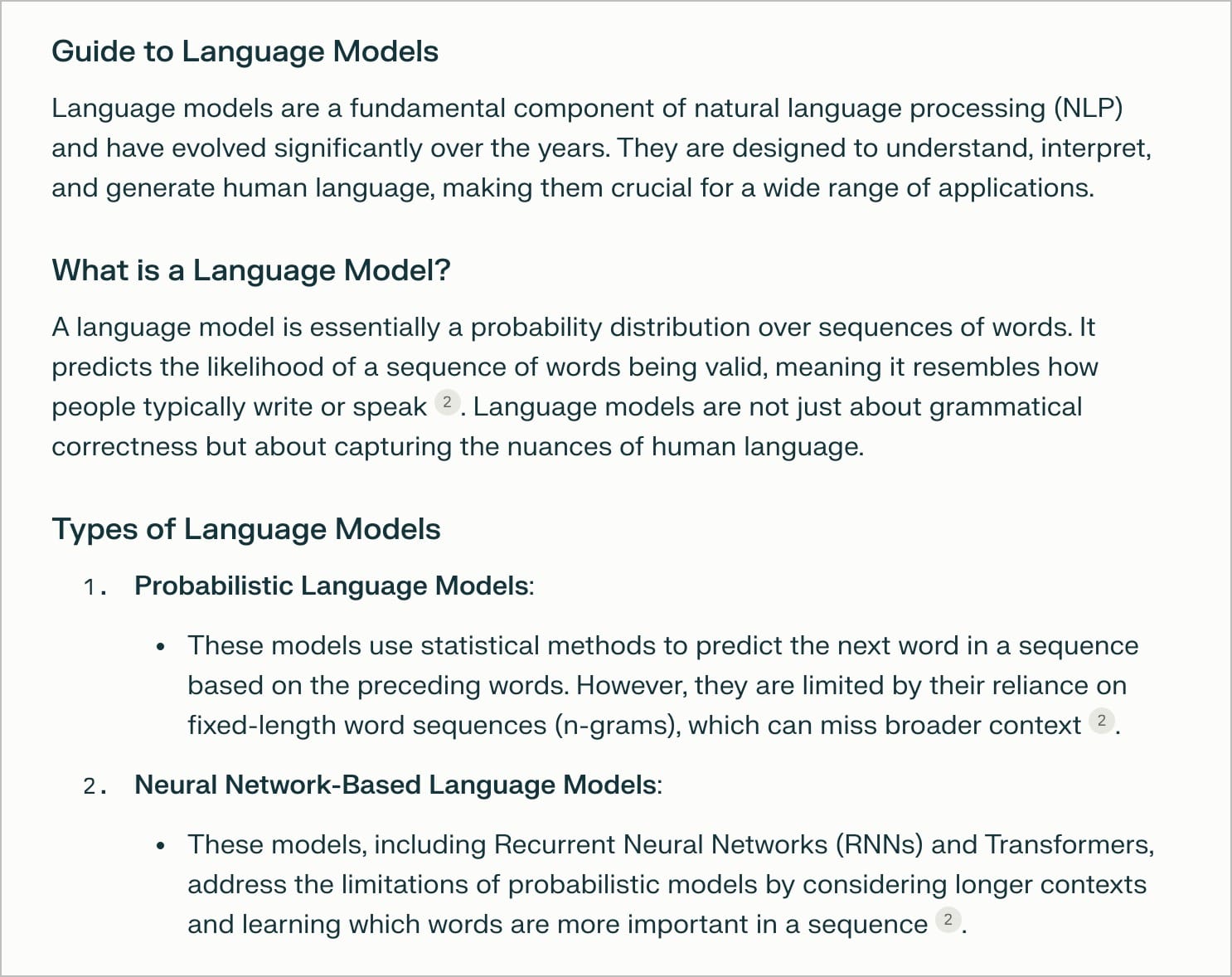

The final giveaway that a piece of content is a copy-and-paste job from a generative AI tool is the formatting. I recently asked ChatGPT to write a guide on language models, and this is what it came up with:

GPT-4-generated output from Perplexity

Each item is often highlighted in bold, and then ChatGPT likes to throw in a colon to expand upon each point. There’s nothing inherently wrong with presenting lists in this fashion, but it has become its signature style and can therefore be easily identified as AI content.
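The bold-label-plus-colon habit is just as easy to check mechanically. A minimal Python sketch, assuming the text is Markdown and that list items start with `-`, `*`, `+` or a number like `1.`; `bold_colon_ratio` is a hypothetical name, and a high ratio only says the text shares ChatGPT’s house style, not who wrote it.

```python
import re

# A list item: optional indent, then a "-", "*", "+" or "1." style marker.
LIST_ITEM = re.compile(r"^\s*(?:[-*+]|\d+\.)\s+")
# A list item whose label is bold and followed by a colon, written either
# as "**Label**: text" or as "**Label:** text".
BOLD_COLON_ITEM = re.compile(r"^\s*(?:[-*+]|\d+\.)\s+\*\*[^*]+?(?::\*\*|\*\*\s*:)\s*\S")


def bold_colon_ratio(markdown: str) -> float:
    """Fraction of Markdown list items that use the bold-label-plus-colon style."""
    items = [line for line in markdown.splitlines() if LIST_ITEM.match(line)]
    if not items:
        return 0.0
    hits = sum(1 for line in items if BOLD_COLON_ITEM.match(line))
    return hits / len(items)


guide = """Here is a guide to language models:
- **Tokens**: the units a model reads.
- **Context window:** how much text fits at once.
- Training data matters too.
1. **Temperature**: controls randomness."""
print(bold_colon_ratio(guide))  # prints 0.75
```

Three of the four list items in the sample match. As with the word counts, a human who simply likes this layout will score just as high.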

Another way to pass the reverse Turing test is to communicate intentionality. One of the best ways to prove you’re not a predictive language model is to demonstrate critical and sophisticated thinking: to show that you’re not just mashing random words together.

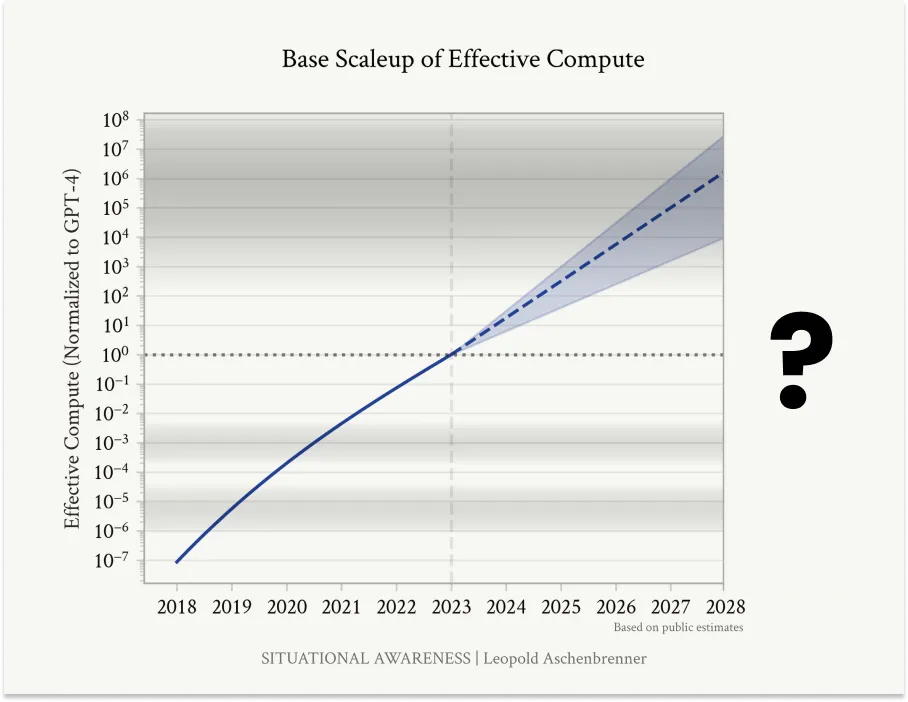

Humans can humanise their writing even further by making it personal. In his essay The Goldilocks Zone, Packy McCormick points out that so far we’ve mostly thought about AI progress in terms of OOMs (orders of magnitude) of Effective Compute. It’s a great measure, and a useful one for a lot of things.

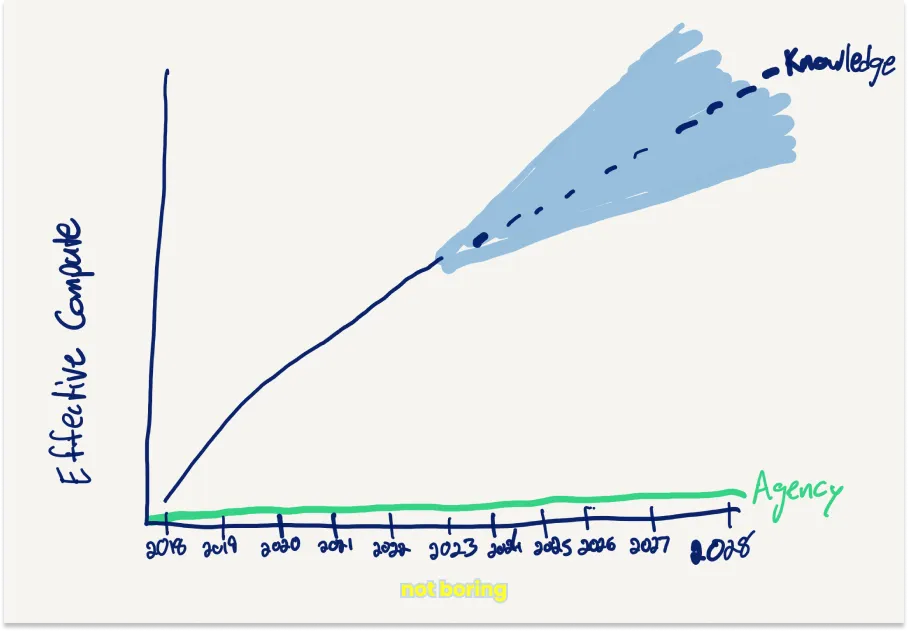

Continuing to scale knowledge won’t magically scale agency.

Packy argues that even if OOMs of Effective Compute keep increasing in a straight line (and we should trust the curve here), we should question how those gains map onto capabilities. Language models don’t have agency and drive, and continuing to scale knowledge won’t magically scale agency too. The two sit on different axes altogether.

Coming back to our original topic, sprinkling in personal narrative is our best bet in the short term for differentiating our writing from predictive language models, since AIs are not scaling up their agency and drive (not yet).

To summarise (this might sound like an AI-generated closing phrase, but I promise, I’m a human being), these are some tactics humans could adopt to distinguish themselves from AI while writing:

- Hipsterism (Packy McCormick’s and Tim Urban’s visuals are great examples of non-conformism, with pencil sketches and handwritten annotations)

- Recency bias (writing about the latest developments, which language models may not know about because of their knowledge cutoff)

- Referencing obscure concepts. Friedrich Nietzsche’s writing seems more Nietzschean because of his use of übermensch, ressentiment, herd, Dionysian/Apollonian and so on. Michel Foucault’s writing sounds more Foucauldian because of his use of archaeology of knowledge, genealogy, biopower and panopticism.

- Referencing friends who are real but not famous

- Niche interests (nootropics, jhanas, nondual meditation, the Alexander Technique, artisanal discourse, metta, end time-theft, Gurdjieff, Zhuangzi, flat pattern drafting, parent figure protocol and so on; you get the drift)

- Increasing reliance on neologisms, protologisms, niche jargon, emoji euphemisms, unusual phrases, in-group dialects, and memes-of-the-moment.

For example, lingo used by Google engineers that isn’t widely known outside the company could be considered protologisms within that specific context.

- Referencing recent events or in-person gatherings you have attended. Your current social context acts as a differentiator. LLMs are predominantly trained on the generalised views of a largely English-speaking, Western population who have written a lot on Reddit and lived between 1900 and 2023. As anthropologists would put it, these are the opinions and perspectives of WEIRD (Western, Educated, Industrialised, Rich, Democratic) societies. They clearly do not represent all human cultures, languages and ways of being.

- Last but not least, referencing personal events, narratives and lived experiences.

Communicating your drive and personal narrative wherever possible.

Language models don’t have that, yet.

